Python OpenCV Project – Motion Detection Squid Game


Introducing the OpenCV Motion Detection Squid Game! This project is a hand-motion game based on the well-known TV show “Squid Game.” Using your webcam, the game tracks your hand movements in real time. But here’s the twist: while the red light is showing, you must keep your hand still, and any detected movement eliminates you. While the green light is showing, you’re free to move your hand, and each detected movement advances your character toward the finish point.

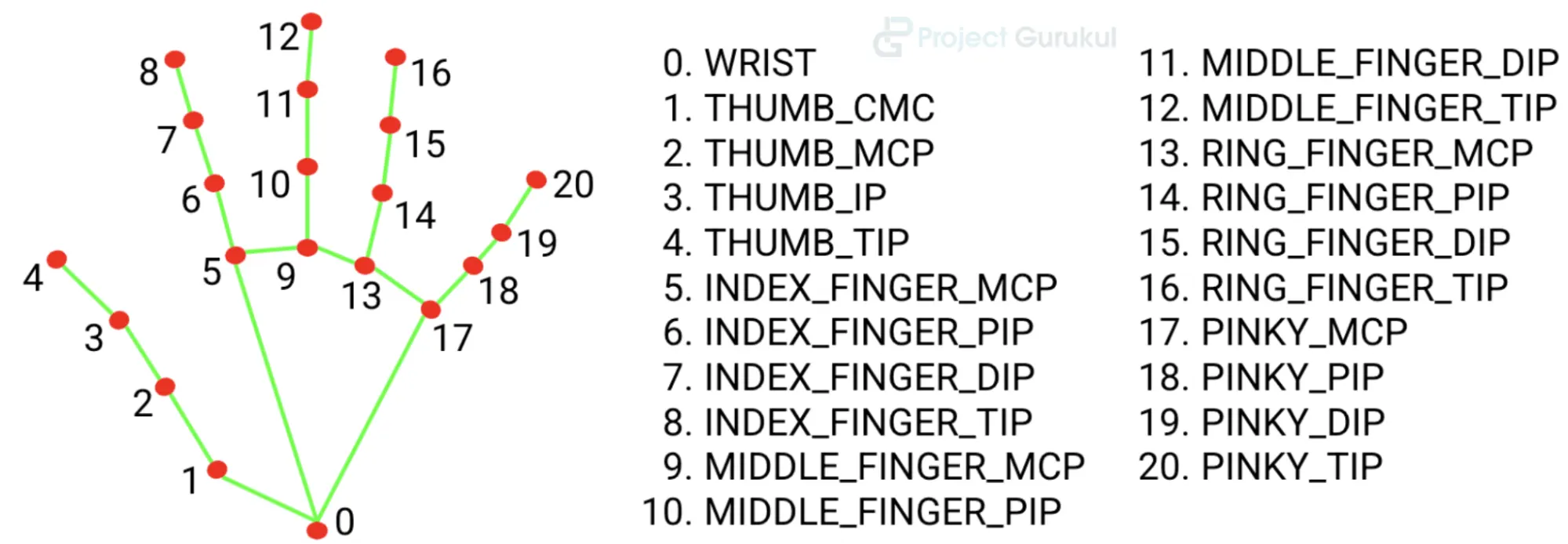

Hand Detection

Hand detection in the Motion Detection Squid Game is accomplished using the MediaPipe library. The game uses a webcam to capture video frames, which are then processed by the Hands module of MediaPipe. This module analyzes the frames to identify and track 21 hand landmarks, such as fingertips, knuckles, and the wrist. Each landmark is reported with x and y coordinates normalized to the frame width and height, plus a relative depth value z. This allows the game to determine the position and movement of the player’s hand throughout the game.
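As a rough illustration of what this landmark data looks like, the sketch below converts normalized coordinates into pixel positions. The `Landmark` tuple is a hypothetical stand-in for MediaPipe’s own landmark objects, so the snippet runs without a camera, and the 640x480 frame size is only an assumed example.

```python
from typing import NamedTuple


class Landmark(NamedTuple):
    """Hypothetical stand-in for one MediaPipe hand landmark."""
    x: float  # normalized to the frame width
    y: float  # normalized to the frame height
    z: float  # relative depth


def to_pixels(landmarks, frame_width, frame_height):
    """Convert normalized landmarks to integer (column, row) pixel positions."""
    return [(int(lm.x * frame_width), int(lm.y * frame_height)) for lm in landmarks]


# Two made-up landmarks: a wrist and an index fingertip.
wrist = Landmark(0.5, 0.75, 0.0)
index_tip = Landmark(0.25, 0.5, -0.05)
pixels = to_pixels([wrist, index_tip], frame_width=640, frame_height=480)
print(pixels)
```

Working in pixels rather than normalized units makes movement thresholds easier to reason about, since they then describe distances on the actual frame.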

Motion Detection

The code detects hand movement by comparing the landmark positions in the current frame with those from the previous frame; OpenCV handles the camera capture and display, and NumPy computes the distance. If the change exceeds a threshold, it counts as movement. Movement detected while the light is red eliminates the player, while movement detected while the light is green keeps the player alive and moves the character forward.
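The frame-to-frame comparison can be sketched in plain Python as follows. This is a minimal sketch rather than the project’s exact code: the function names are hypothetical, the points are assumed to be pixel coordinates, and the threshold of 20 mirrors the finger-movement threshold used later in this article.

```python
import math


def total_displacement(prev_points, curr_points):
    """Euclidean norm of all per-point displacements taken together."""
    return math.sqrt(sum((cx - px) ** 2 + (cy - py) ** 2
                         for (px, py), (cx, cy) in zip(prev_points, curr_points)))


def has_moved(prev_points, curr_points, threshold=20.0):
    """Return True when the combined displacement exceeds the threshold."""
    return total_displacement(prev_points, curr_points) > threshold


prev = [(100.0, 100.0), (200.0, 200.0)]
still = [(101.0, 100.0), (200.0, 201.0)]   # jitter of about one pixel
moved = [(130.0, 140.0), (200.0, 200.0)]   # first point moved 50 pixels

print(has_moved(prev, still))
print(has_moved(prev, moved))
```

A threshold is needed because landmark positions jitter slightly even when the hand is held still.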

MediaPipe

MediaPipe is a powerful computer vision framework developed by Google. It provides pre-built models that can detect various objects, including hands. By utilizing the hand detection model, we can create unique applications that allow users to interact with digital content using their hands alone, without the need for additional input devices.

The model identifies the position of the user’s hand and provides coordinate data that the game compares from frame to frame to track movement. This makes hand tracking a more intuitive way to interact with a program than a keyboard or mouse.

How can hand movements be used to perform different challenges in the virtual squid game?

In the virtual Squid Game, your hand movements drive the whole challenge. The game uses MediaPipe and OpenCV to track how your hand moves from one frame to the next. Moving your hand while the light is green advances your character, and holding it still while the light is red keeps you in the game. The challenge tests your timing and reaction speed, and it relies entirely on your physical movements rather than on a keyboard or mouse.

What kind of challenge will be given by this game to the player?

The player is challenged to move a character using hand movement captured by the camera. The player needs to show their full hand in front of the camera; any hand or finger movement above a set threshold during a green light moves the character forward. The objective of the game is to move the character from the start point to the finish point on the screen. If the player reaches the finish point, they are declared the winner. The game provides a fun and interactive challenge that tests the player’s hand-eye coordination and responsiveness.

How does the game use hand movement detection to complete the challenge?

The game uses the camera to detect and track the player’s hand in real time. Movement detected during a green light moves the character a few pixels toward the finish point, while movement detected during a red light ends the game. The program provides visual feedback by stacking the live camera feed on top of the current light image in a single game window. It tests the player’s coordination and responsiveness.

Prerequisites for Motion Detection Squid Game using Python OpenCV

A strong grasp of the Python programming language and familiarity with the OpenCV library are essential prerequisites. Additionally, meeting the following specified system requirements is crucial for optimal performance.

- Python 3.7 (64-bit) or above

- Any Python editor (VS Code, PyCharm)

Download Python OpenCV Motion Detection Squid Game Project

Please download the source code of Python OpenCV Motion Detection Squid Game Project: Python OpenCV Motion Detection Squid Game Project Code.

Installation

Open Windows cmd as administrator

1. To install the opencv library, run the command from the cmd.

pip install opencv-python

2. To install the mediapipe library, run the command from the cmd.

pip install mediapipe
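To confirm that the installation worked, a small check like the one below can help. It is only a sketch: `missing_packages` is a hypothetical helper, and it relies on the fact that the opencv-python package is imported under the name cv2.

```python
import importlib.util


def missing_packages(module_names):
    """Return the names from module_names that cannot be found for import."""
    return [name for name in module_names
            if importlib.util.find_spec(name) is None]


# opencv-python is imported as cv2; numpy is installed alongside it.
required = ["cv2", "mediapipe", "numpy"]
missing = missing_packages(required)
if missing:
    print("Missing packages:", ", ".join(missing))
else:
    print("All required packages are installed.")
```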

Let’s Implement

To implement this, follow the below steps.

1. We need to import some libraries that will be used in our implementation.

import cv2
import mediapipe as mp
import numpy as np
import time
import math
import random

2. The code initializes the hand detection module and creates an instance to detect hands. It also imports drawing utilities for working with hand-related tasks.

mpHands = mp.solutions.hands
hands = mpHands.Hands(max_num_hands=1, min_detection_confidence=0.8)
mpDraw = mp.solutions.drawing_utils

3. Open the default camera (index 0), which is usually the integrated webcam of a laptop.

cap = cv2.VideoCapture(0)

4. These variables hold the game state: prev_landmarks stores the hand landmarks from the previous frame, isAlive is a flag that is set to True when the player moves during a red light (despite its name, True means the player is eliminated), start_time and duration time the current light, start_position and finish_position are used to place the Start and Finish labels, char_position tracks the character’s current x-coordinate, char_speed is the number of pixels the character advances per detected movement, and hand_detected records whether a hand is visible in the current frame.

prev_landmarks = None
isAlive = False
start_time = time.time()
duration = random.uniform(0, 5)
start_position = (20, 800)
finish_position = (690, 650)
char_position = start_position[0]
char_speed = 3
hand_detected = False

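5. The code loads two images, ‘green.png’ and ‘red.png’, using OpenCV. The variable ‘currWindow’ is assigned the value of the ‘im1’ image, which is ‘green.png’. cv2.imread returns None if a file cannot be read, so keep both images in the folder you run the script from. Both images must also be 800 pixels wide, because step 12 stacks the current image under the 800-pixel-wide camera frame.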

im1 = cv2.imread('green.png')

im2 = cv2.imread('red.png')

currWindow = im1

6. Start While loop.

while True:

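7. Inside the loop, the code reads a video frame and stops if the read fails, obtains the frame’s shape, flips the frame horizontally so it behaves like a mirror, converts it from BGR to RGB, and processes it with the Hands model. The result holds any detected hand landmarks.

    ret, frame = cap.read()
    if not ret:
        break  # stop if the camera did not return a frame
    # frame.shape is (height, width, channels), so x holds the height and y the width
    x, y, c = frame.shape
    frame = cv2.flip(frame, 1)
    framergb = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)
    result = hands.process(framergb)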

8. Once the current duration has elapsed, the code toggles between the green and red images, restarts the clock, and draws a new random duration of 3 to 8 seconds for the next light.

    if time.time() - start_time >= duration:
        if currWindow is im1:
            currWindow = im2
        else:
            currWindow = im1
        start_time = time.time()
        duration = random.uniform(3, 8)
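The light-switching logic can also be isolated in a small class, which makes it easy to try out without a camera. The sketch below is one possible arrangement, not the project’s code: `LightTimer` and its parameters are hypothetical names, and the clock and random source are injectable so that the demo is deterministic.

```python
import random
import time


class LightTimer:
    """Toggles between 'green' and 'red' after random intervals."""

    def __init__(self, clock=time.time, rng=random.uniform,
                 first_range=(0, 5), next_range=(3, 8)):
        self.clock = clock
        self.rng = rng
        self.next_range = next_range
        self.light = "green"
        self.started = clock()
        self.duration = rng(*first_range)

    def update(self):
        """Switch the light if the current interval has elapsed; return the light."""
        now = self.clock()
        if now - self.started >= self.duration:
            self.light = "red" if self.light == "green" else "green"
            self.started = now
            self.duration = self.rng(*self.next_range)
        return self.light


# Demo with a fake clock and a fake random source that always returns
# the upper bound, so the first light lasts 5 s and later ones 8 s.
fake_now = [0.0]
timer = LightTimer(clock=lambda: fake_now[0], rng=lambda low, high: high)
states = []
for t in (1.0, 5.0, 9.0, 13.0):
    fake_now[0] = t
    states.append(timer.update())
print(states)
```

In the real game loop, `update()` would be called once per frame with the default clock and random source.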

9. It checks for detected hand landmarks in the result. If landmarks are found, it sets hand_detected to true, retrieves the landmarks for the first detected hand, and visualizes them on the frame.

    if result.multi_hand_landmarks:
        hand_detected = True
        hand_landmarks = result.multi_hand_landmarks[0]
        mpDraw.draw_landmarks(frame, hand_landmarks, mpHands.HAND_CONNECTIONS)

10. It compares the hand landmarks of the current frame with those of the previous frame. finger_movement is the combined displacement of landmarks 4 to 20 (thumb tip onward), and hand_movement is the displacement of the wrist (landmark 0). If either value exceeds its threshold while the green image is showing, the character moves forward. If either exceeds its threshold while the red image is showing, isAlive is set to True, which marks the player as eliminated. Otherwise, it prints a message indicating no hand movement. The current hand landmarks are then stored for the next iteration. The wrist displacement is computed with math.hypot, because cv2.norm treats its two arguments as two arrays to compare rather than as the x and y components of one vector.

        if prev_landmarks is not None:
            # Landmarks 4 to 20 (thumb tip onward), scaled by the frame dimensions
            prev_finger_pos = np.array([(lm.x * x, lm.y * y) for lm in prev_landmarks.landmark[4:]])
            curr_finger_pos = np.array([(lm.x * x, lm.y * y) for lm in hand_landmarks.landmark[4:]])
            finger_movement = np.linalg.norm(curr_finger_pos - prev_finger_pos)
            # Wrist (landmark 0) displacement between the two frames
            hand_movement = math.hypot(
                hand_landmarks.landmark[0].x * x - prev_landmarks.landmark[0].x * x,
                hand_landmarks.landmark[0].y * y - prev_landmarks.landmark[0].y * y)
            if (finger_movement > 20 or hand_movement > 25) and np.array_equal(currWindow, im1):
                char_position += char_speed  # moved on green: advance
            elif (finger_movement > 20 or hand_movement > 25) and np.array_equal(currWindow, im2):
                isAlive = True  # moved on red: the player is eliminated
            else:
                print("Hand Movement Not Detected")
        prev_landmarks = hand_landmarks

Note: place this block inside the if block from step 9.
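Separated from the camera code, the red light/green light rule is small enough to express as a single function. The following is a simplified sketch under stated assumptions: `GameState`, `apply_frame`, and the `eliminated` field are hypothetical names, and `moved` stands for the outcome of the threshold checks.

```python
from dataclasses import dataclass


@dataclass
class GameState:
    char_position: int = 20   # starting x-coordinate of the character
    eliminated: bool = False  # set once the player moves on red


def apply_frame(state, light, moved, char_speed=3):
    """Apply one frame of the red light/green light rule and return the state."""
    if moved and light == "green":
        state.char_position += char_speed
    elif moved and light == "red":
        state.eliminated = True
    return state


state = GameState()
frames = [("green", True), ("green", False), ("green", True), ("red", False)]
for light, moved in frames:
    apply_frame(state, light, moved)
print(state)

# Moving during a red light eliminates the player.
apply_frame(state, "red", True)
print(state.eliminated)
```

Naming the flag `eliminated` rather than `isAlive` avoids the inverted meaning, at the cost of differing from the variable names used in this article.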

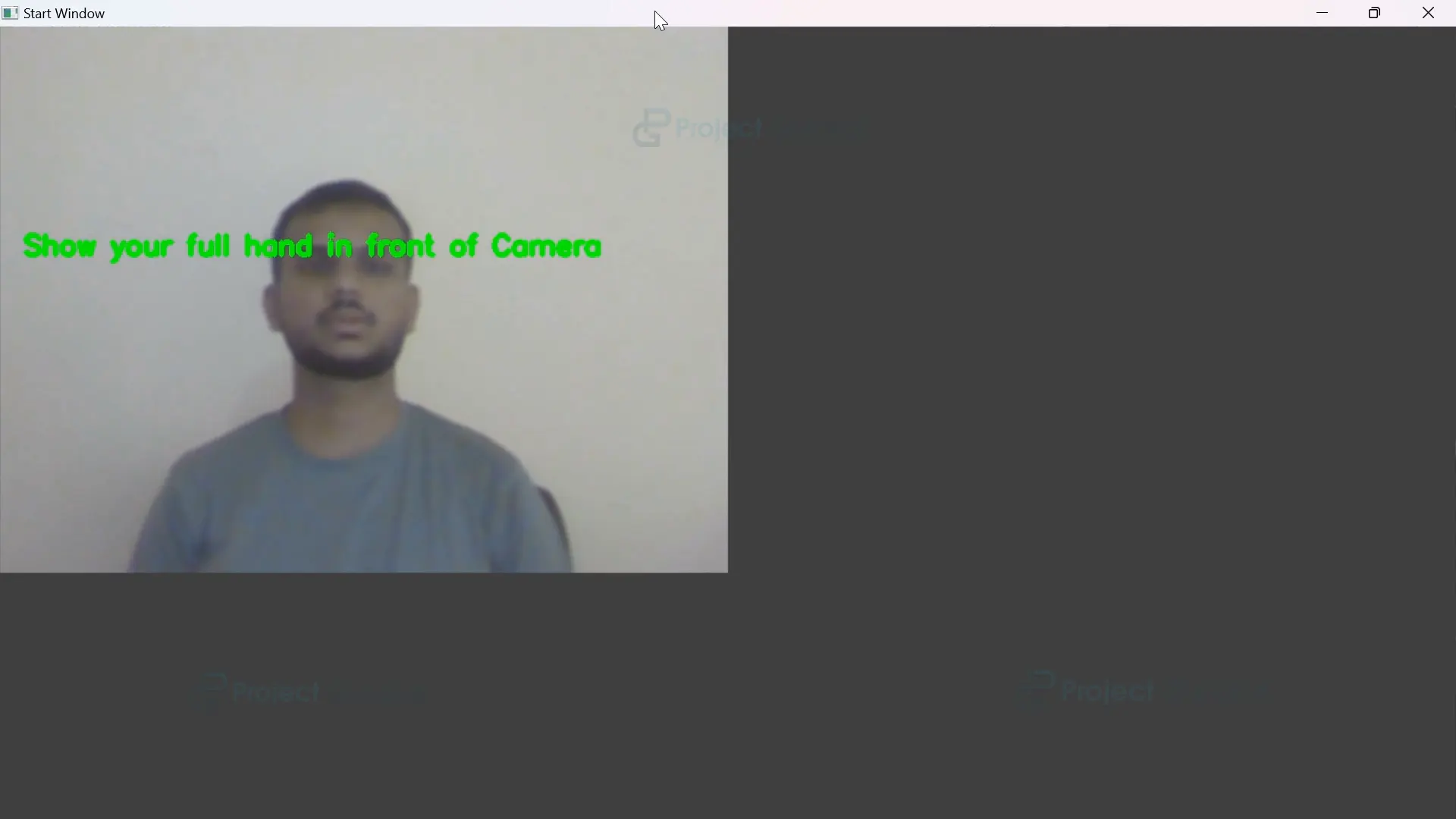

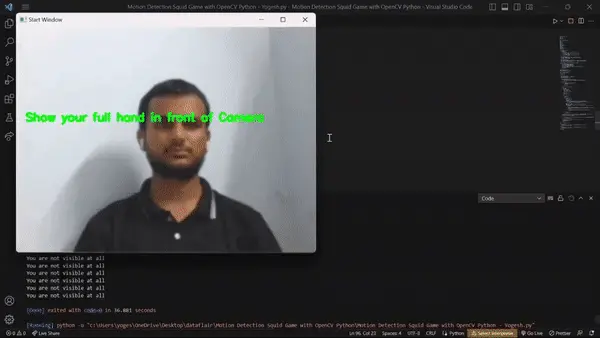

11. If no hand is detected, the code asks the user to show their entire hand in front of the camera through a message on the frame and a console print. The hand_detected flag is set to False, the previous landmark data is cleared, and the light is reset to green.

    else:
        hand_detected = False
        prev_landmarks = None
        cv2.putText(frame, "Show your full hand in front of Camera", (20, 200), cv2.FONT_HERSHEY_SIMPLEX, 0.8,
                    (0, 255, 0), 4)
        cv2.imshow("Start Window", frame)
        currWindow = im1
        print("Hand Not Detected")

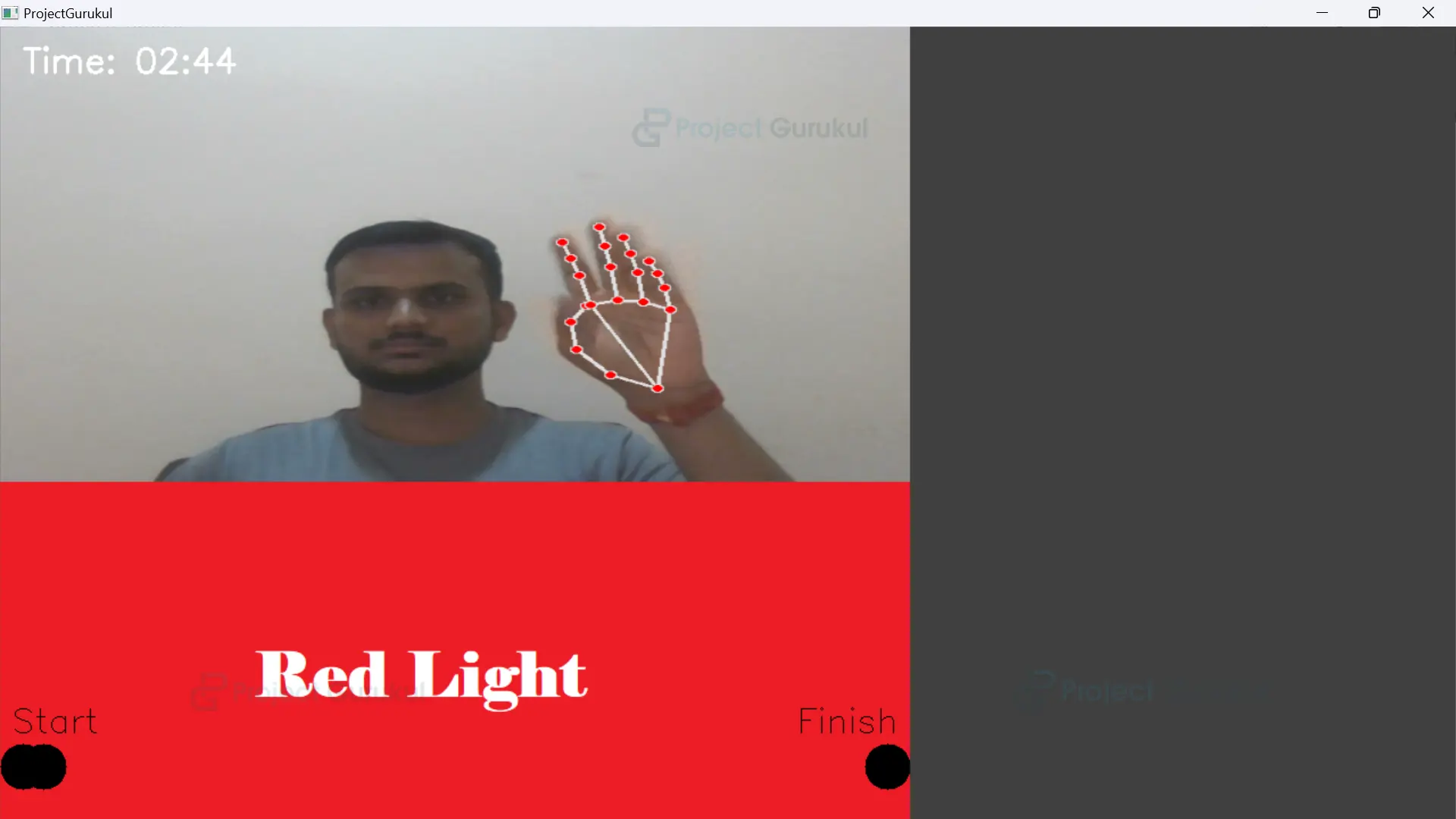

12. When a hand is detected, the code displays game elements on the screen, including start and finish points. It also shows the character’s position and prints its value to the console. The resulting image is then shown in a window titled “ProjectGurukul”.

    if hand_detected:
        mainWin = np.concatenate((cv2.resize(frame, (800, 400)), currWindow), axis=0)
        cv2.circle(mainWin, (20, 650), 20, (0, 0, 0), -1)
        cv2.putText(mainWin, "Start", (start_position[0] - 10, 630 - 10),
                    cv2.FONT_HERSHEY_SIMPLEX, 1, (0, 0, 0), 1)
        cv2.circle(mainWin, (780, 650), 20, (0, 0, 0), -1)
        cv2.putText(mainWin, "Finish", (finish_position[0] + 10, 630 - 10),
                    cv2.FONT_HERSHEY_SIMPLEX, 1, (0, 0, 0), 1)
        cv2.circle(mainWin, (char_position, 650), 20, (0, 0, 0), -1)
        print(char_position)
        cv2.imshow("ProjectGurukul", mainWin)

13. When the character (a filled circle) reaches the finish position (char_position >= 770), the player is declared the winner, and the result is displayed in the Result Window until a key is pressed.

        if char_position >= 770:
            print("You are a winner!")
            cv2.putText(mainWin, "Winner", (300, 400), cv2.FONT_HERSHEY_SIMPLEX, 2, (0, 255, 0), 3)
            cv2.imshow("Result Window", mainWin)
            cv2.waitKey(0)
            break

Note: place this step inside the if hand_detected block from step 12.

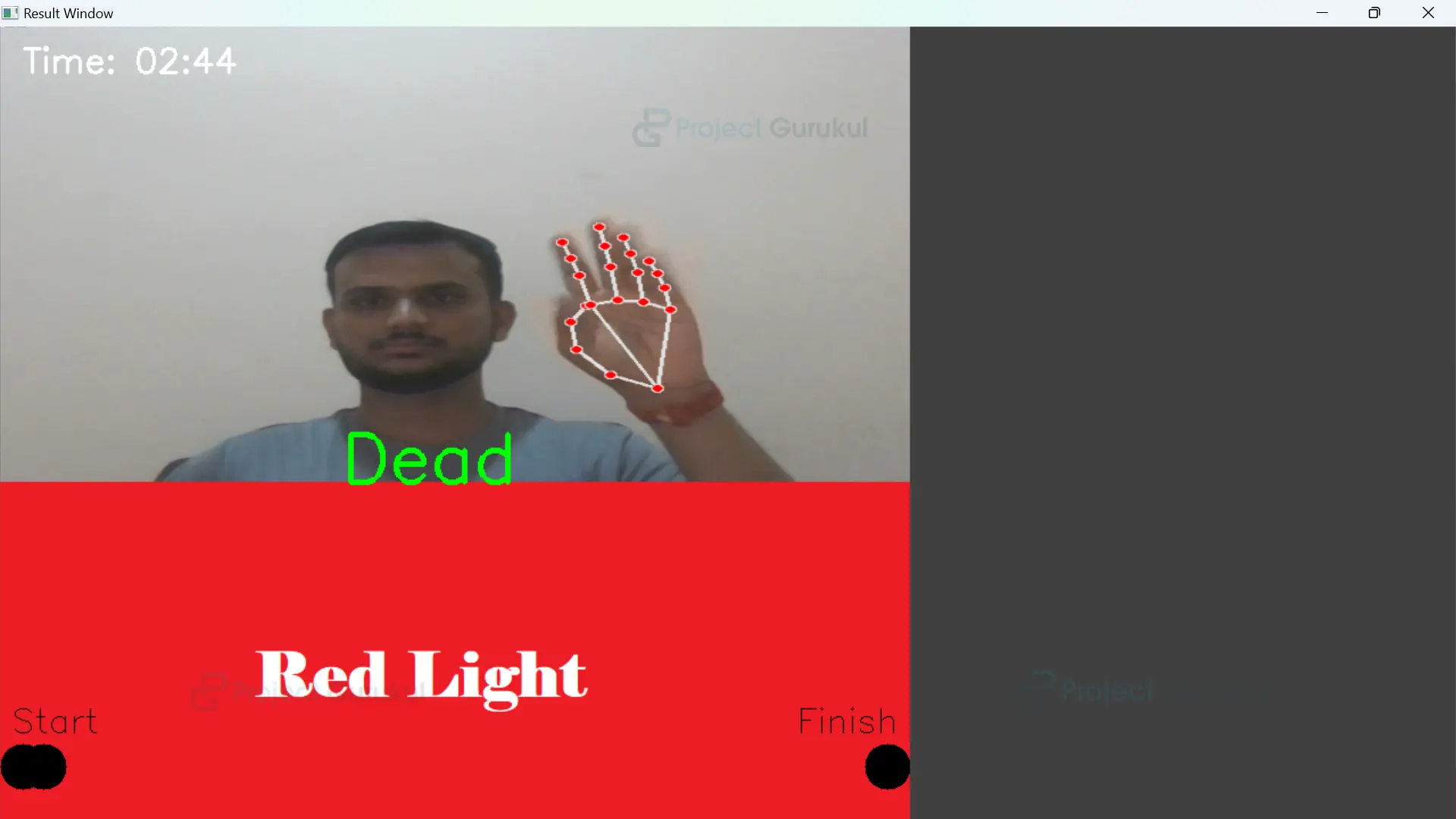

14. If hand movement was detected during a red light, isAlive is True (despite its name, the flag marks elimination), and the player is declared dead in the Result Window.

        if isAlive:
            print("You are Dead!")
            cv2.putText(mainWin, "Dead", (300, 400), cv2.FONT_HERSHEY_SIMPLEX, 2, (0, 255, 0), 3)
            cv2.imshow("Result Window", mainWin)
            cv2.waitKey(0)
            break

Note: place this step inside the if hand_detected block from step 12.
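The two end conditions from steps 13 and 14 can be summarized in one helper, checked in the same order as in the loop (win first, then elimination). This is an illustrative sketch: `game_outcome` and its parameter names are hypothetical, the finish threshold of 770 comes from step 13, and the optional `remaining_time` argument reflects the countdown that appears only in the full code.

```python
def game_outcome(char_position, moved_on_red, remaining_time=None, finish_x=770):
    """Return 'winner', 'dead', or 'playing' for the current frame."""
    if char_position >= finish_x:
        return "winner"
    # remaining_time is optional because only the full code uses a countdown.
    if moved_on_red or (remaining_time is not None and remaining_time <= 0):
        return "dead"
    return "playing"


print(game_outcome(char_position=770, moved_on_red=False))
print(game_outcome(char_position=300, moved_on_red=True))
print(game_outcome(char_position=300, moved_on_red=False, remaining_time=0))
print(game_outcome(char_position=300, moved_on_red=False, remaining_time=42))
```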

15. If the user presses the ‘q’ key, then the program will stop executing.

    if cv2.waitKey(1) == ord('q'):
        break

16. It releases all the resources occupied by the program once the program stops executing.

cap.release()
cv2.destroyAllWindows()

Python OpenCV Motion Detection Squid Game Output


Full Code

import cv2
import mediapipe as mp
import numpy as np
import time
import math
import random

# Hand detector: track a single hand with a high detection confidence
mpHands = mp.solutions.hands
hands = mpHands.Hands(max_num_hands=1, min_detection_confidence=0.8)
mpDraw = mp.solutions.drawing_utils

cap = cv2.VideoCapture(0)

# Light images; both must be 800 pixels wide to stack under the camera frame
im1 = cv2.imread('green.png')
im2 = cv2.imread('red.png')
if im1 is None or im2 is None:
    raise FileNotFoundError("green.png and red.png must be readable from the working directory")
currWindow = im1

prev_landmarks = None
isAlive = False  # despite its name, True means the player moved on red
start_time = time.time()
duration = random.uniform(0, 5)
start_position = (20, 800)
finish_position = (690, 650)
char_position = start_position[0]
char_speed = 3
hand_detected = False

# Timer variables
start_timer = None
timer_duration = 180  # 3 minutes in seconds

while True:
    ret, frame = cap.read()
    if not ret:
        break  # stop if the camera did not return a frame
    # frame.shape is (height, width, channels), so x holds the height and y the width
    x, y, c = frame.shape
    frame = cv2.flip(frame, 1)
    framergb = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)
    result = hands.process(framergb)

    # Toggle the light once the current random duration has elapsed
    if time.time() - start_time >= duration:
        if currWindow is im1:
            currWindow = im2
        else:
            currWindow = im1
        start_time = time.time()
        duration = random.uniform(3, 8)

    if result.multi_hand_landmarks:
        hand_detected = True
        if start_timer is None:
            start_timer = time.time()  # the countdown starts when a hand appears
        hand_landmarks = result.multi_hand_landmarks[0]
        mpDraw.draw_landmarks(frame, hand_landmarks, mpHands.HAND_CONNECTIONS)
        if prev_landmarks is not None:
            # Landmarks 4 to 20 (thumb tip onward), scaled by the frame dimensions
            prev_finger_pos = np.array([(lm.x * x, lm.y * y) for lm in prev_landmarks.landmark[4:]])
            curr_finger_pos = np.array([(lm.x * x, lm.y * y) for lm in hand_landmarks.landmark[4:]])
            finger_movement = np.linalg.norm(curr_finger_pos - prev_finger_pos)
            # Wrist (landmark 0) displacement between the two frames
            hand_movement = math.hypot(
                hand_landmarks.landmark[0].x * x - prev_landmarks.landmark[0].x * x,
                hand_landmarks.landmark[0].y * y - prev_landmarks.landmark[0].y * y)
            if (finger_movement > 20 or hand_movement > 25) and np.array_equal(currWindow, im1):
                char_position += char_speed  # moved on green: advance
            elif (finger_movement > 20 or hand_movement > 25) and np.array_equal(currWindow, im2):
                isAlive = True  # moved on red: the player is eliminated
            else:
                print("Hand Movement Not Detected")
        prev_landmarks = hand_landmarks
    else:
        # No hand: reset the countdown, the landmarks, and the light
        hand_detected = False
        start_timer = None
        prev_landmarks = None
        cv2.putText(frame, "Show your full hand in front of Camera", (20, 200), cv2.FONT_HERSHEY_SIMPLEX, 0.8,
                    (0, 255, 0), 4)
        cv2.imshow("Start Window", frame)
        currWindow = im1
        print("Hand Not Detected")

    if hand_detected:
        elapsed_time = int(time.time() - start_timer)
        remaining_time = max(0, timer_duration - elapsed_time)
        # Stack the camera frame on top of the current light image
        mainWin = np.concatenate((cv2.resize(frame, (800, 400)), currWindow), axis=0)
        cv2.putText(mainWin, f"Time: {remaining_time // 60:02d}:{remaining_time % 60:02d}", (20, 40),
                    cv2.FONT_HERSHEY_SIMPLEX, 1, (255, 255, 255), 2)
        cv2.circle(mainWin, (20, 650), 20, (0, 0, 0), -1)
        cv2.putText(mainWin, "Start", (start_position[0] - 10, 630 - 10),
                    cv2.FONT_HERSHEY_SIMPLEX, 1, (0, 0, 0), 1)
        cv2.circle(mainWin, (780, 650), 20, (0, 0, 0), -1)
        cv2.putText(mainWin, "Finish", (finish_position[0] + 10, 630 - 10),
                    cv2.FONT_HERSHEY_SIMPLEX, 1, (0, 0, 0), 1)
        cv2.circle(mainWin, (char_position, 650), 20, (0, 0, 0), -1)
        print(char_position)
        cv2.imshow("ProjectGurukul", mainWin)

        if char_position >= 770:
            print("You are a winner!")
            cv2.putText(mainWin, "Winner", (300, 400), cv2.FONT_HERSHEY_SIMPLEX, 2, (0, 255, 0), 3)
            cv2.imshow("Result Window", mainWin)
            cv2.waitKey(0)
            break

        if isAlive or remaining_time <= 0:
            print("You are Dead!")
            cv2.putText(mainWin, "Dead", (300, 400), cv2.FONT_HERSHEY_SIMPLEX, 2, (0, 255, 0), 3)
            cv2.imshow("Result Window", mainWin)
            cv2.waitKey(0)
            break

    if cv2.waitKey(1) == ord('q'):
        break

cap.release()
cv2.destroyAllWindows()
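The full code adds a three-minute countdown that the step-by-step walkthrough does not cover. The arithmetic behind the on-screen clock can be sketched on its own as follows; the helper names `remaining_seconds` and `format_clock` are hypothetical, and the timestamps in the example are made up.

```python
def remaining_seconds(start_timer, now, timer_duration=180):
    """Whole seconds left on the countdown, never below zero."""
    elapsed = int(now - start_timer)
    return max(0, timer_duration - elapsed)


def format_clock(seconds):
    """Format a number of seconds as MM:SS."""
    return f"{seconds // 60:02d}:{seconds % 60:02d}"


# 45.5 seconds after the hand first appeared, 135 seconds remain.
left = remaining_seconds(start_timer=100.0, now=145.5)
print(format_clock(left))

# Long after the limit, the clock stays at zero instead of going negative.
print(format_clock(remaining_seconds(start_timer=0.0, now=500.0)))
```

Because the full code resets start_timer to None whenever the hand leaves the frame, the countdown restarts each time the hand reappears.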

Conclusion

In summary, this project uses computer vision and hand tracking to create an interactive game experience. By detecting and tracking the player’s hand movements, it lets them move a character on the screen. The objective is to move the character from the start point to the finish point without moving during a red light and, in the full code, before the three-minute timer runs out. The program provides visual feedback and tests the player’s hand-eye coordination and responsiveness. Overall, it offers an engaging and challenging game that combines technology and user interaction.
