Fake News Detection Project in Python with Machine Learning


With machines producing an ever-growing volume of data every second, there is a real concern that some of this data is false (or fake). Fake news (or data) can be dangerous: imagine being given the wrong medicine because of false information.

Luckily, machine learning can help address this problem. We can develop a machine learning model in Python that detects whether a piece of news is fake or not.

About Fake News Detection Project

In this machine learning project, we build a classifier that detects whether the news is fake or not.

This is a binary classification problem. We preprocess the text data from our dataset using TF-IDF Vectorizer. We apply the Multinomial Naive Bayes algorithm to the preprocessed text and train and evaluate our model on the dataset.

Fake News Dataset

The dataset for this Python project contains two CSV files, True.csv and Fake.csv. One contains the true (correct) news and the other contains the fake news. Together, they comprise 44,898 instances.

Please download the fake news dataset: Download Fake News Dataset

Tools and Libraries:

In this Python fake news detection project, we use the following libraries:

- Python – 3.x

- Pandas – 1.2.4

- Scikit-learn – 0.24.1

- NLTK – 3.6.2

To install the above modules, please run the following command:

pip install pandas scikit-learn nltk

Detecting Fake News with Python

The goal is to build a machine learning model that accurately classifies news as REAL or FAKE.

Download Fake News Detection Python Code

Please download the source code of python fake news detection project: Fake News Detection Project Code

Steps to Build Fake News Classifier in Python

We will follow these steps to build the required fake news classifier.

- Perform Exploratory Data Analysis (EDA).

- Build the classifier model.

- Train and evaluate the model.

Step 1: Perform Exploratory Data Analysis (EDA):

Load the dataset using pandas.

import pandas as pd

true_df = pd.read_csv('./Desktop/ProjectGurukul/Fake News Detection/True.csv')

fake_df = pd.read_csv('./Desktop/ProjectGurukul/Fake News Detection/Fake.csv')

Neither file contains target labels, so add the respective labels to the dataframes: 0 for true news and 1 for fake news.

true_df['label'] = 0
fake_df['label'] = 1

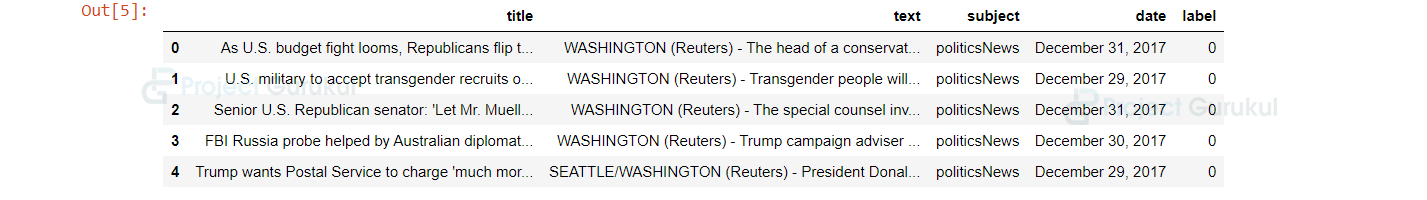

Let’s take a look at the datasets.

true_df.head()

Output:

fake_df.head()

Output:

As you can see, we only need the text and label columns for our task, so let’s drop the rest.

true_df = true_df[['text','label']]
fake_df = fake_df[['text','label']]

Next, combine both dataframes into one using the pandas concat() function.

dataset = pd.concat([true_df , fake_df])
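The labelling and concatenation steps can be tried out on a tiny example first. The sketch below uses two hypothetical toy dataframes (the rows are made up and are not taken from True.csv or Fake.csv) and passes `ignore_index=True`, an optional argument that is not used in the tutorial code, so that the combined frame gets a fresh index.

```python
import pandas as pd

# Toy stand-ins for True.csv and Fake.csv (hypothetical rows, not real data).
true_toy = pd.DataFrame({"text": ["senate passes budget bill", "city opens new library"]})
fake_toy = pd.DataFrame({"text": ["aliens endorse candidate"]})

# Same labelling convention as the tutorial: 0 = true news, 1 = fake news.
true_toy["label"] = 0
fake_toy["label"] = 1

# ignore_index=True gives the combined frame a fresh 0..n-1 index
# instead of repeating each source frame's own index.
combined = pd.concat([true_toy, fake_toy], ignore_index=True)

print(combined.shape)
print(combined["label"].tolist())
```

Without `ignore_index=True`, the row indices of both source frames are kept, so the combined frame contains duplicate index values. That is harmless for this project but can be confusing when inspecting rows by index.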

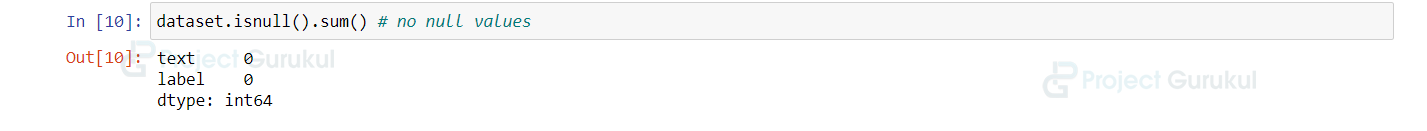

The next step in the fake news detection project is to check for null values in the dataset and verify that it has a balanced distribution of labels.
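As a minimal sketch of such a check, the example below counts missing values per column and computes the share of each label. It runs on a hypothetical toy dataframe rather than the real dataset; on the project data the same calls would be made on `dataset`.

```python
import pandas as pd

# Hypothetical toy data with one missing text value.
toy = pd.DataFrame({
    "text": ["markets rally", None, "moon made of cheese", "storm hits coast"],
    "label": [0, 0, 1, 1],
})

# Number of null values in each column.
null_counts = toy.isnull().sum()
print(null_counts)

# Drop rows whose text is missing, then look at the share of each label.
toy = toy.dropna(subset=["text"])
label_share = toy["label"].value_counts(normalize=True)
print(label_share)
```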

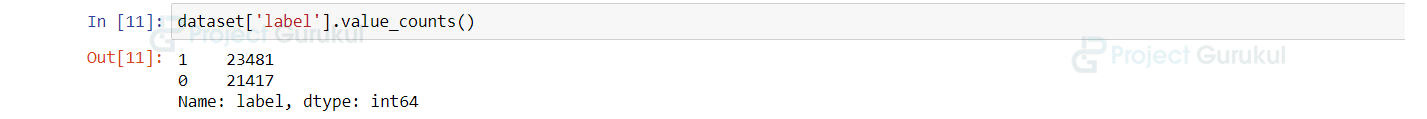

dataset['label'].value_counts()

Output:

You can see that our dataset has a good distribution of target labels.

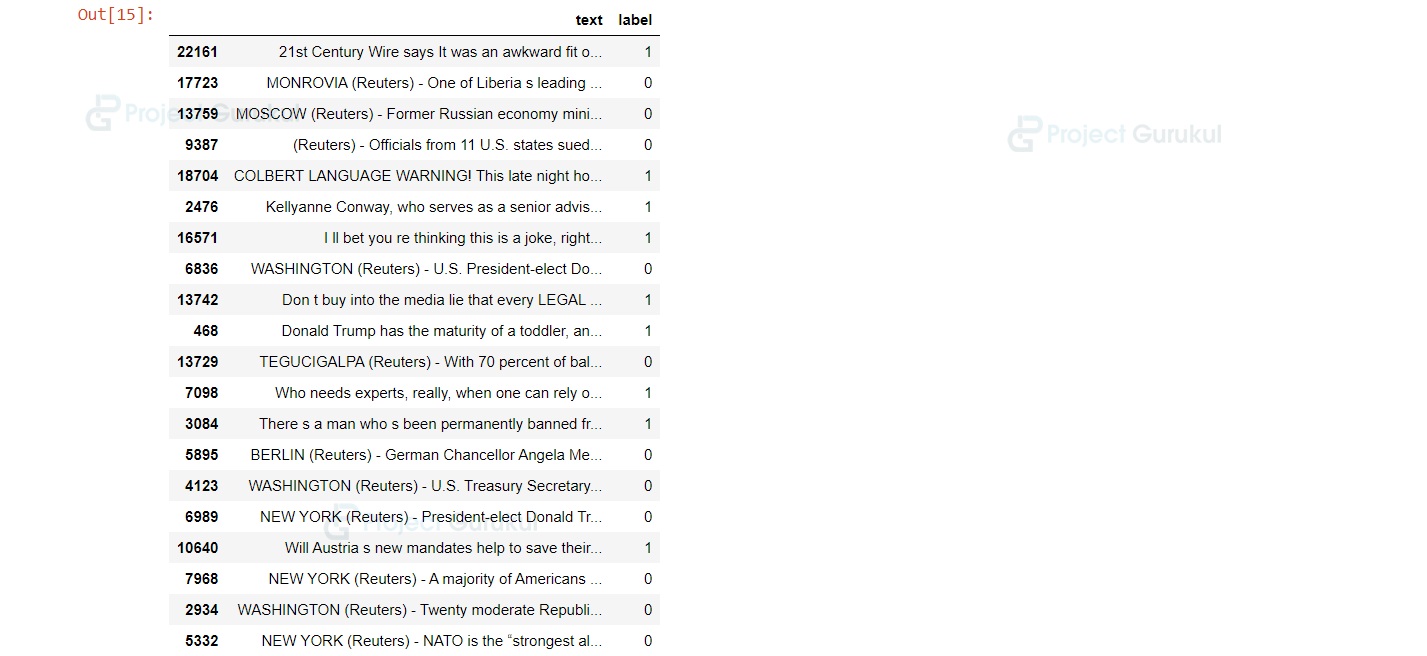

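Because we concatenated the dataframes, all the true news comes first, followed by all the fake news. We should shuffle the dataset; otherwise, any slice of it (such as the first 35,000 rows used later) would be dominated by one class, and the model's accuracy would suffer.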

dataset = dataset.sample(frac = 1)
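A small sketch of the same shuffle on hypothetical toy data is shown here. It adds two optional arguments that the tutorial code does not use: `random_state`, so the shuffle is reproducible, and `reset_index(drop=True)`, so the shuffled rows are renumbered from 0.

```python
import pandas as pd

# Hypothetical ordered data: all 0 labels first, then all 1 labels.
ordered = pd.DataFrame({
    "text": [f"article {i}" for i in range(6)],
    "label": [0, 0, 0, 1, 1, 1],
})

# frac=1 samples every row once, which amounts to a full shuffle.
shuffled = ordered.sample(frac=1, random_state=42).reset_index(drop=True)

print(shuffled)
```

The shuffle only reorders rows, so the number of rows and the count of each label stay the same.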

Let’s have a peek at the dataset.

dataset.head(20)

Output:

The input to our machine learning model is text, which the algorithms cannot interpret directly. We therefore preprocess the text: we convert it to lowercase and replace every non-alphabetic character with white space (" "). Then we lemmatize the text, meaning that we convert each word to its base (root) form.

Here is an example of lemmatization: the words study, studied, and studying are all converted to their base word, study. Note that NLTK's WordNetLemmatizer treats every word as a noun unless a part-of-speech tag is passed, so verb forms such as studied and studying are reduced only when pos='v' is supplied.

We also remove the stopwords from our text. Stopwords are very common words such as a, an, and the. They occur frequently but carry little information for the classification.
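To make the cleaning steps concrete, here is a minimal sketch that lowercases a sentence, strips non-alphabetic characters, and removes stopwords. It deliberately leaves out lemmatization and uses a tiny hypothetical stopword set (`TOY_STOPWORDS`) instead of NLTK's English list, so that it runs without downloading any NLTK data.

```python
import re

# A tiny, hypothetical stopword list for illustration only;
# the tutorial itself uses NLTK's English stopword list.
TOY_STOPWORDS = {"a", "an", "the", "is", "in", "of", "to"}

def basic_clean(text):
    # Lowercase, then replace every non-alphabetic character with a space.
    text = text.lower()
    text = re.sub(r"[^a-z]", " ", text)
    # Split on whitespace and keep only the words that are not stopwords.
    tokens = [word for word in text.split() if word not in TOY_STOPWORDS]
    return " ".join(tokens)

sample = "The Senate voted 52-48 to pass the bill in 2017!"
cleaned = basic_clean(sample)
print(cleaned)  # senate voted pass bill
```

Digits and punctuation disappear along with the stopwords, which is the intended effect of the regular expression.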

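import re

import nltk
from nltk.corpus import stopwords
from nltk.stem import WordNetLemmatizer

# Download the NLTK resources used below (only needed once).
nltk.download('stopwords')
nltk.download('wordnet')

lemmatizer = WordNetLemmatizer()
# Use a separate name so the imported stopwords module is not overwritten;
# a set makes membership checks fast.
stop_words = set(stopwords.words('english'))

Let’s define a function to preprocess the text data.

def clean_data(text):
    # Lowercase the text and replace non-alphabetic characters with spaces.
    text = text.lower()
    text = re.sub('[^a-zA-Z]', ' ', text)
    # Tokenize, drop stopwords, and lemmatize the remaining words.
    tokens = text.split()
    tokens = [lemmatizer.lemmatize(word) for word in tokens if word not in stop_words]
    return ' '.join(tokens)

dataset['text'] = dataset['text'].apply(clean_data)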

Let’s convert this preprocessed text data into vectors using the TF-IDF Vectorizer.

TF-IDF vectorization is count vectorization (the frequency of each word in the text) followed by the TF-IDF transformation. It is one of the most common ways to turn text into a numeric representation that a machine learning algorithm can accept.

Term Frequency (TF) is the number of times a term/word occurs in a document divided by the total number of words in that document.

Inverse Document Frequency (IDF) is the logarithm of the total number of documents divided by the number of documents containing the word.

The TF-IDF value of a word is the product of its Term Frequency and its Inverse Document Frequency.
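The definitions above can be worked through by hand. The following sketch computes TF, IDF, and their product for a hypothetical three-document corpus, using the plain textbook formulas. scikit-learn's TfidfVectorizer uses a smoothed IDF and L2-normalizes each document vector by default, so its numbers will differ from these.

```python
import math

# Hypothetical toy corpus, already cleaned and lowercased.
docs = [
    "government announces new budget",
    "shocking secret government plot",
    "new budget shocking",
]
tokenized = [doc.split() for doc in docs]

def tf(term, tokens):
    # Occurrences of the term divided by the number of words in the document.
    return tokens.count(term) / len(tokens)

def idf(term, corpus):
    # Log of (number of documents / number of documents containing the term).
    # Assumes the term occurs in at least one document.
    containing = sum(1 for tokens in corpus if term in tokens)
    return math.log(len(corpus) / containing)

def tf_idf(term, tokens, corpus):
    return tf(term, tokens) * idf(term, corpus)

# "plot" appears in one document, "government" in two.
score_plot = tf_idf("plot", tokenized[1], tokenized)
score_gov = tf_idf("government", tokenized[1], tokenized)
print(score_plot, score_gov)
```

Both words occur once in the second document, so their TF is equal. The rarer word, "plot", gets the higher score because its IDF is larger.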

from sklearn.feature_extraction.text import TfidfVectorizer
vectorizer = TfidfVectorizer(max_features = 50000 , lowercase=False , ngram_range=(1,2))
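To see what `ngram_range=(1,2)` does, the sketch below fits a vectorizer on two hypothetical sentences and inspects the learned vocabulary. It shows that both single words and pairs of adjacent words become features. `max_features` is left out here because the toy vocabulary is tiny.

```python
from sklearn.feature_extraction.text import TfidfVectorizer

# Hypothetical toy documents, already lowercased (hence lowercase=False).
toy_docs = ["breaking news today", "fake news spreads fast"]

toy_vectorizer = TfidfVectorizer(lowercase=False, ngram_range=(1, 2))
toy_matrix = toy_vectorizer.fit_transform(toy_docs)

# vocabulary_ maps each unigram or bigram to its column index.
vocab = sorted(toy_vectorizer.vocabulary_)
print(vocab)
print(toy_matrix.shape)
```

The six distinct words and five distinct adjacent word pairs give 11 columns in total. On the full dataset the number of such features grows quickly, which is why the tutorial caps it with `max_features`.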

Let’s separate the features (X) and the labels (y) of the dataset.

X = dataset.iloc[:35000,0]
y = dataset.iloc[:35000,1]

I’ve used only the first 35,000 samples of the fake news dataset because of memory (RAM) constraints.

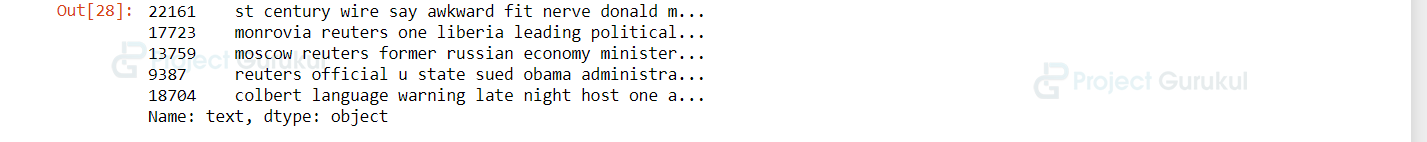

Let’s see the data stored in X and y.

X.head()

Output:

y.head()

Output:

Step 2: Build the classifier model

Let’s split the data into an 80-20 ratio using the train_test_split() function.

from sklearn.model_selection import train_test_split
train_X , test_X , train_y , test_y = train_test_split(X , y , test_size = 0.2 ,random_state = 0)
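The split can be checked on a small hypothetical example. The sketch below also passes `stratify`, an optional argument that the tutorial code does not use, which keeps the proportion of each label the same in the training and test sets.

```python
from sklearn.model_selection import train_test_split

# Hypothetical toy data: ten samples, five of each label.
samples = [f"article {i}" for i in range(10)]
labels = [0, 1, 0, 1, 0, 1, 0, 1, 0, 1]

toy_train_X, toy_test_X, toy_train_y, toy_test_y = train_test_split(
    samples, labels, test_size=0.2, random_state=0, stratify=labels
)

print(len(toy_train_X), len(toy_test_X))
print(sorted(toy_test_y))
```

With `test_size=0.2`, eight samples go to training and two to testing, and stratification places one sample of each label in the test set.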

Now, convert the text data into vectors using the vectorizer defined above and convert them to pandas DataFrames.

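# Fit the vectorizer on the training text only, then reuse it on the test text.
vec_train = vectorizer.fit_transform(train_X).toarray()
vec_test = vectorizer.transform(test_X).toarray()
# In scikit-learn 1.0 and later, use get_feature_names_out() instead of get_feature_names().
feature_names = vectorizer.get_feature_names()
train_data = pd.DataFrame(vec_train , columns=feature_names)
test_data = pd.DataFrame(vec_test , columns=feature_names)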
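Converting the vectors to dense arrays is what makes memory a concern on the full dataset. As an alternative sketch, and not the approach taken in this tutorial, scikit-learn's MultinomialNB can be fitted directly on the sparse matrices returned by the vectorizer. The example below uses hypothetical toy sentences.

```python
from scipy.sparse import issparse
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.naive_bayes import MultinomialNB

# Hypothetical toy data: label 0 = true news, label 1 = fake news.
toy_train = [
    "official report confirms growth",
    "minister signs trade agreement",
    "shocking miracle cure exposed",
    "secret plot shocking truth",
]
toy_labels = [0, 0, 1, 1]
toy_test = ["shocking secret cure"]

vec = TfidfVectorizer()
sparse_train = vec.fit_transform(toy_train)  # stays a SciPy sparse matrix
sparse_test = vec.transform(toy_test)

# No .toarray() call: the classifier works on the sparse input directly.
model = MultinomialNB()
model.fit(sparse_train, toy_labels)
pred = model.predict(sparse_test)
print(pred)
```

A sparse matrix stores only the non-zero entries, so it usually needs far less memory than the equivalent dense DataFrame when most documents contain only a small part of the vocabulary.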

Let’s apply the Multinomial Naive Bayes algorithm to our dataset.

Multinomial Naive Bayes is the Naive Bayes algorithm applied to data that follows a multinomial distribution, in which each trial can have k >= 2 outcomes. This supervised classification algorithm is suited to discrete features such as the word counts of a text; in practice it also works with fractional values such as TF-IDF scores.

We chose this algorithm for the fake news detection project because Multinomial NB works well with high-dimensional data and with text data.
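To illustrate the idea behind the algorithm, here is a minimal from-scratch sketch of Multinomial Naive Bayes on raw word counts with Laplace (add-one) smoothing. The training sentences, the function names, and the choice of `alpha=1.0` are assumptions made for illustration. The project itself relies on scikit-learn's MultinomialNB, which is more general and more efficient.

```python
import math
from collections import Counter

# Hypothetical toy training data: label 0 = true news, label 1 = fake news.
train = [
    ("official report confirms growth", 0),
    ("minister signs trade agreement", 0),
    ("shocking miracle cure exposed", 1),
    ("secret plot shocking truth", 1),
]

vocab = {word for text, _ in train for word in text.split()}
class_docs = Counter(label for _, label in train)

# Count how often each word appears in each class.
word_counts = {0: Counter(), 1: Counter()}
for text, label in train:
    word_counts[label].update(text.split())

def log_posterior(text, label, alpha=1.0):
    # log P(class) + sum over words of log P(word | class), with smoothing.
    score = math.log(class_docs[label] / len(train))
    total = sum(word_counts[label].values())
    for word in text.split():
        if word not in vocab:
            continue  # ignore words never seen during training
        score += math.log((word_counts[label][word] + alpha) / (total + alpha * len(vocab)))
    return score

def predict(text):
    # Pick the class with the higher (unnormalized) log posterior.
    return max((0, 1), key=lambda label: log_posterior(text, label))

print(predict("shocking secret cure"))
print(predict("minister confirms report"))
```

The smoothing term `alpha` keeps a word that was never seen in one class from driving that class's probability to zero.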

from sklearn.naive_bayes import MultinomialNB
clf = MultinomialNB()

Step 3: Train and Evaluate Fake News Detection Model

Let’s train the fake news detection model using the fit function and record the predictions made by the classifier.

from sklearn.metrics import accuracy_score,classification_report
clf.fit(train_data, train_y)
predictions = clf.predict(test_data)

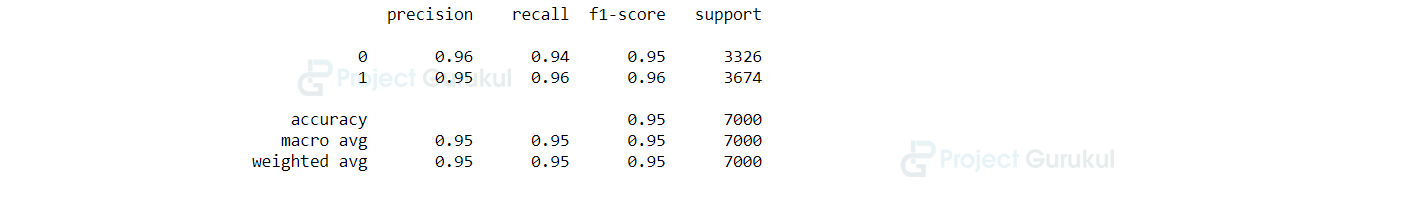

The classification report for our model is shown below.

print(classification_report(test_y , predictions))

Output:

We got an accuracy of 95% on our test dataset.
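The classification report lists precision, recall, and F1-score alongside accuracy. As a sketch of how these numbers are derived, the example below computes them by hand for the positive (fake) class. The labels and predictions are hypothetical and are not the results of this project.

```python
def binary_metrics(y_true, y_pred, positive=1):
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)

    # Precision: how many predicted positives were correct.
    precision = tp / (tp + fp) if tp + fp else 0.0
    # Recall: how many actual positives were found.
    recall = tp / (tp + fn) if tp + fn else 0.0
    # F1: harmonic mean of precision and recall.
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    accuracy = sum(1 for t, p in pairs if t == p) / len(pairs)
    return {"precision": precision, "recall": recall, "f1": f1, "accuracy": accuracy}

# Hypothetical labels and predictions (1 = fake, 0 = true).
y_true = [1, 1, 1, 0, 0, 0, 0, 1]
y_pred = [1, 1, 0, 0, 0, 1, 0, 1]

metrics = binary_metrics(y_true, y_pred)
print(metrics)
```

In this toy case there are three true positives, one false positive, and one false negative, so precision, recall, F1, and accuracy all come out to 0.75.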

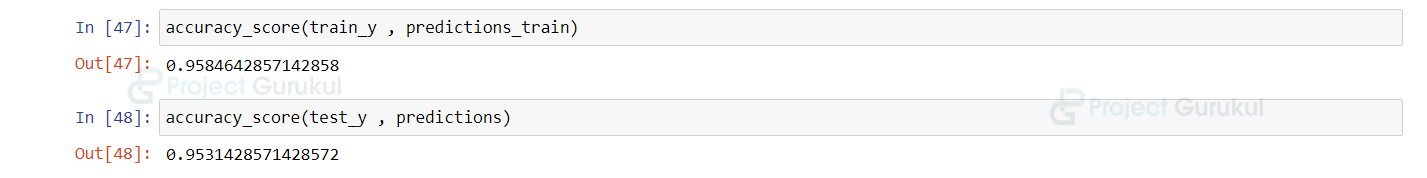

Let’s see the accuracy score of our model on the training data.

predictions_train = clf.predict(train_data)
accuracy_score(train_y , predictions_train)

Output:

Summary:

In this machine learning project, we built a classifier using a supervised machine learning algorithm to determine whether a piece of news is false (fake).

We applied the supervised Multinomial Naive Bayes algorithm in this Python fake news detection project and achieved 95% accuracy.
